Deploying an EKS cluster with Flatcar workers

Amazon Elastic Kubernetes Service (EKS) is a fully managed Kubernetes service on Amazon Web Services (AWS). Flatcar Container Linux is a self-updating operating system designed for containers, which makes it a good fit for running Kubernetes and other container platforms. It suits anyone deploying containerized applications, particularly those who want to do so securely or at scale.

Because it's container-optimized, Flatcar Container Linux is already the OS of choice for EKS workers at companies around the world.

Until recently, joining a Flatcar instance to an EKS cluster required some complex manual configuration. With our latest 2605.12.0 Stable release, available in the AWS Marketplace, you can deploy Flatcar Container Linux Pro on your EKS workers and have them join your cluster with minimal configuration.

There are many ways to add workers to your EKS cluster. In this post, we'll show you how to do it using Launch Templates, through both the web console and the AWS CLI, and how to create the whole cluster with Terraform and eksctl.

Whichever method you choose, a few steps are common to all of them. We'll start with those.

Subscribing to the Flatcar Container Linux Pro offer

Regardless of how you choose to deploy your workers, you'll need to subscribe to the Flatcar Container Linux Pro offer in the AWS Marketplace.

This needs to be done only once per organization. After that, you'll be able to deploy as many instances as you want with whatever method you prefer.

Subscribing to the offer enables your organization to deploy the Flatcar Container Linux Pro images distributed through the AWS Marketplace. You'll only be charged for the hours of actual use, so until you deploy the images this won't mean any extra costs.

Worker IAM Role

When launching EKS workers, we need to assign an IAM Role to them, which gives them access to the AWS resources they need. For our Flatcar Container Linux Pro workers we'll need to create a role that includes the following policies:

- AmazonEKSWorkerNodePolicy

- AmazonEKS_CNI_Policy

- AmazonEC2ContainerRegistryReadOnly

- AmazonS3ReadOnlyAccess

See AWS's IAM documentation on how to create an IAM role for a step-by-step guide.
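
If you prefer the command line, the same role can be sketched with the AWS CLI. This is only a minimal sketch under a few assumptions: the role name eks-worker-role (chosen to match the example ARN used later in this post), the trust policy file name, and the helper function names are all ours, and the trust policy assumes the role is used by plain EC2 instances.

```shell
# Sketch: create a worker role and attach the four managed policies.
# ROLE_NAME, the file name and the function names are illustrative assumptions.
ROLE_NAME="${ROLE_NAME:-eks-worker-role}"

# AWS-managed policies needed by the Flatcar Pro workers.
WORKER_POLICIES=(
  AmazonEKSWorkerNodePolicy
  AmazonEKS_CNI_Policy
  AmazonEC2ContainerRegistryReadOnly
  AmazonS3ReadOnlyAccess
)

# Print a trust policy that lets EC2 instances assume the role.
trust_policy() {
  cat <<'JSON'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
JSON
}

# Create the role, then attach each policy by its ARN.
create_worker_role() {
  trust_policy > worker-trust-policy.json
  aws iam create-role \
    --role-name "$ROLE_NAME" \
    --assume-role-policy-document file://worker-trust-policy.json
  local policy
  for policy in "${WORKER_POLICIES[@]}"; do
    aws iam attach-role-policy \
      --role-name "$ROLE_NAME" \
      --policy-arn "arn:aws:iam::aws:policy/${policy}"
  done
}

# Uncomment to run against your account:
# create_worker_role
```

Nothing is created until you call create_worker_role, so you can review the policy list first.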

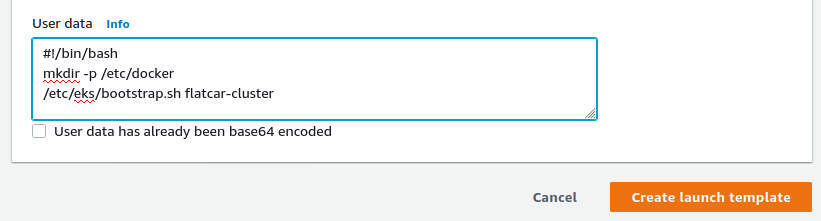

User data

In order for your Flatcar workers to join the cluster, you'll need to set a short script like this one as the user-data:

#!/bin/bash
mkdir -p /etc/docker
/etc/eks/bootstrap.sh <cluster-name>
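
Since the only thing that changes between clusters is the name passed to bootstrap.sh, the script can be generated instead of edited by hand. Here's a small sketch of that idea; render_user_data is a helper name we made up for illustration, and flatcar-cluster is just an example cluster name.

```shell
# Sketch: print the user-data script for a given cluster name.
# render_user_data is a hypothetical helper, not part of Flatcar or EKS.
render_user_data() {
  local cluster_name="$1"
  printf '#!/bin/bash\nmkdir -p /etc/docker\n/etc/eks/bootstrap.sh %s\n' "$cluster_name"
}

# Example: write the script for a cluster called flatcar-cluster.
render_user_data flatcar-cluster > user-data.sh
```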

Where should you set that script? Well, that depends on how you're spinning up your workers. In the rest of this post, we'll look at the following methods:

- Creating Launch Templates in the Web console

- Creating Launch Templates with the AWS CLI

- Using Terraform to provision the whole cluster

- Using eksctl to provision the whole cluster

Using Launch Templates

Using EC2 Launch Templates to manage your EKS Node Groups allows you to specify the configuration for the nodes that you'll be adding to your cluster, including which AMIs they should run and any additional configuration that you want to set.

For this example, we'll assume there's already an active EKS cluster, to which we'll add worker nodes running Flatcar Container Linux Pro. There are a bunch of different ways to spin up the cluster, which we won't cover here. Check out Amazon's documentation on how to get your EKS cluster ready.

When creating the cluster, note that the user who creates it must also be the one who runs kubectl against it, at least the first time. Make sure you create the cluster with a user that has CLI credentials.

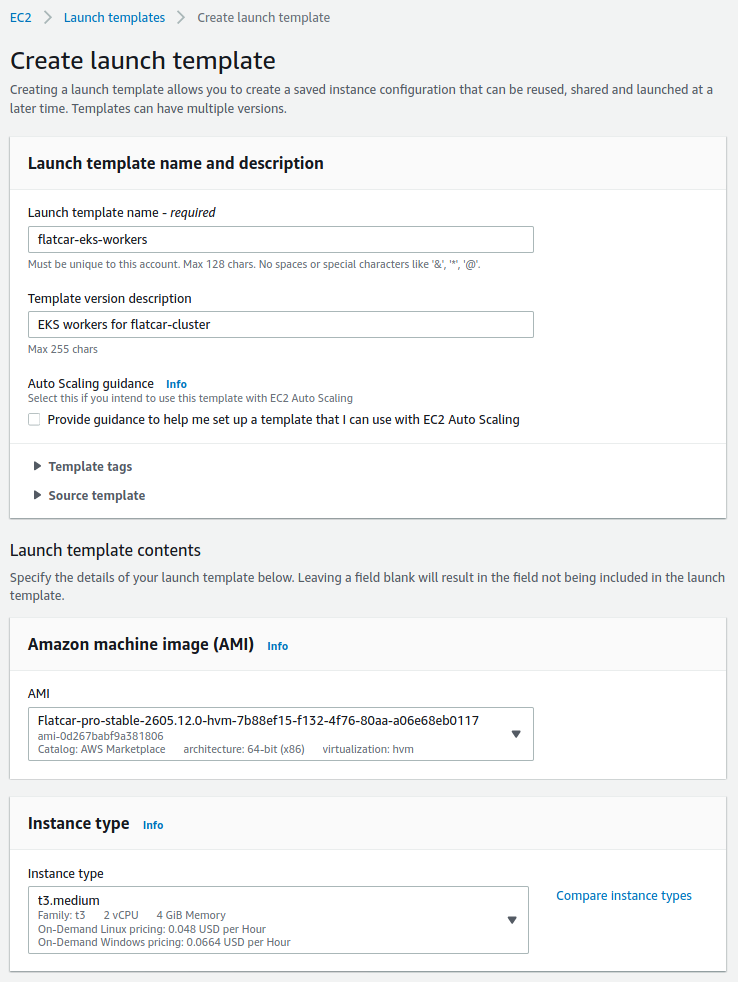

Creating the Launch Template

We'll create an EC2 Launch Template that will include all the customization options that we want to set for our Flatcar nodes. We'll start by giving the template a name and description, and then selecting the AMI and instance type that we want.

To find the latest Flatcar Pro AMI, we simply typed "Flatcar Pro" in the search box and selected the latest version available from the AWS Marketplace.

We've chosen t3.medium as the instance type for this example. You can select the instance type that suits your needs best.

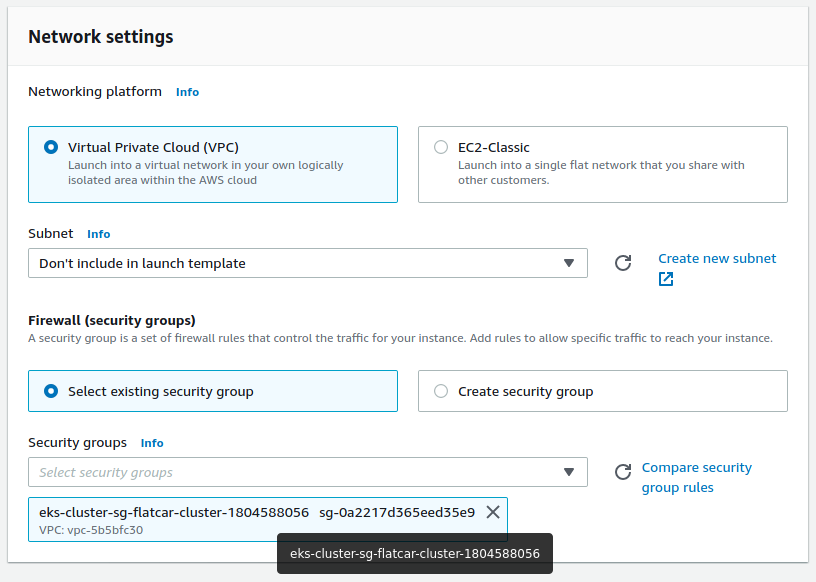

Next, we'll need to set the right security group. We'll use the group that was created automatically along with the EKS cluster, which follows the pattern eks-cluster-sg-<cluster-name>.

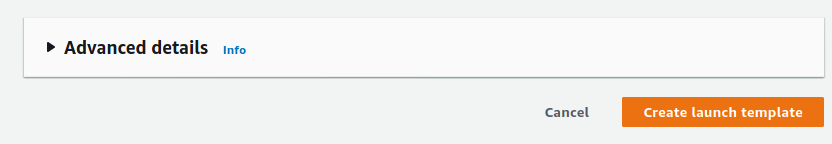

And finally, we'll expand the advanced details section and enter the necessary user data.

You can copy and paste this snippet, replacing <cluster-name> with the name of the cluster to which your workers should be added:

#!/bin/bash
mkdir -p /etc/docker
/etc/eks/bootstrap.sh <cluster-name>

Optionally, you may also want to select an SSH key. This would allow you to connect to your workers through SSH if you want to debug your setup, but it's not necessary for running Kubernetes workloads.

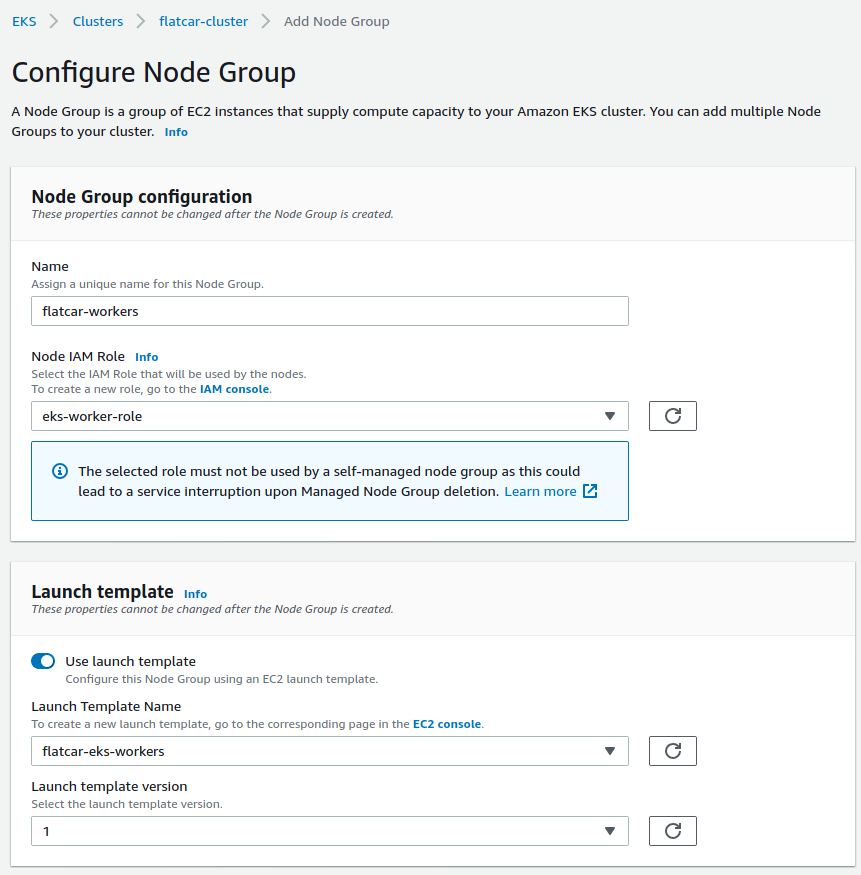

Adding the Node Group

Once we've created our Launch Template, we're ready to add a managed Node Group to our EKS cluster using this template.

We'll find our cluster in the EKS cluster list, go to the Configuration tab, and in the Compute tab click the "Add Node Group" button. This will prompt us to configure the Node Group that we want to add.

We'll select the worker role that we created for these nodes as well as the launch template that we've set up. For the rest of the settings, we can leave the default values, or change them as we see fit. We can now launch our Flatcar nodes!

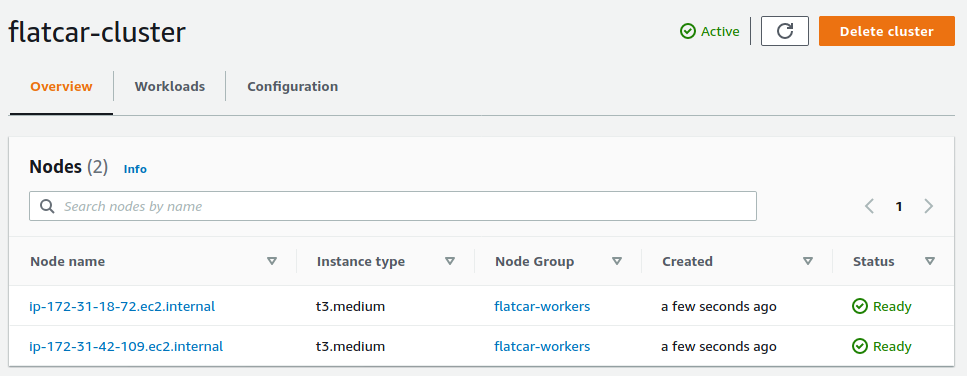

They'll take a minute or two to be ready. You can check their status in the Overview window of your cluster.

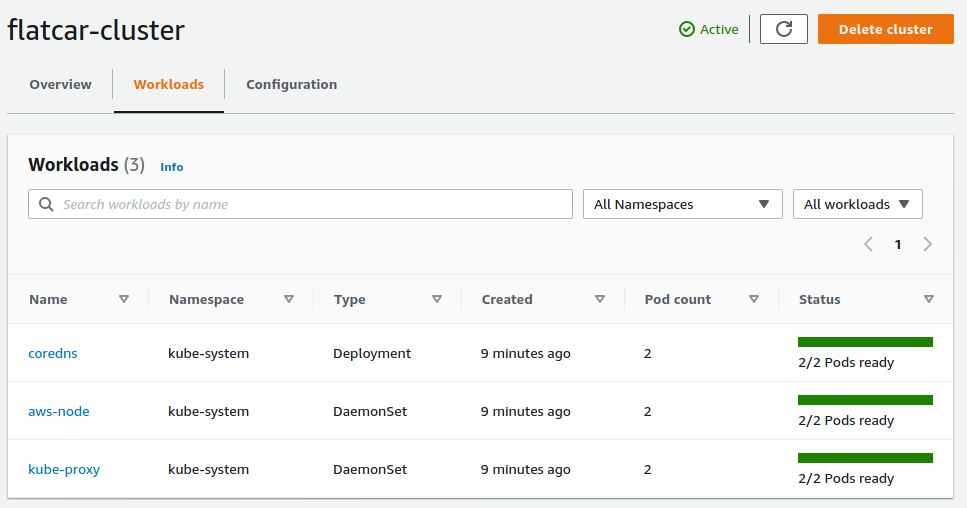

Once the nodes are ready, we can check out the Kubernetes workloads running on the cluster.

Using the AWS CLI

As an alternative to the web console, we can follow similar steps through the AWS command line tool. Remember that to use this tool you need to have access to the AWS credentials of the account you want to use, either through the config file or through environment variables.
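
Before going further, it can help to confirm which identity the CLI is actually using, especially since the user that created the cluster matters for kubectl access. A minimal sketch, where current_identity is a helper name of our own:

```shell
# Sketch: show which AWS identity the CLI will act as.
# current_identity is a hypothetical helper name.
current_identity() {
  aws sts get-caller-identity --query 'Arn' --output text
}

# Usage: compare the result with the user that created the cluster.
# current_identity
```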

Using this tool means that we need to find out some values that the web console looked up for us when filling in the templates. There are several ways to obtain these values; we'll show just one here.

We'll start by fetching the identifier of the latest Flatcar Pro AMI available in the marketplace. We can use a command like this one:

$ aws ec2 describe-images \
    --owners aws-marketplace \
    --region us-east-1 \
    --filters "Name=name,Values=Flatcar-pro-stable*" \
    --query 'sort_by(Images, &CreationDate)[-1].[ImageId,Name,CreationDate]'
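
If you want to reuse the result in later commands, one option is to narrow the query to the image ID and ask for plain text output. This is a sketch rather than the only way to do it; latest_flatcar_ami is a helper name we made up.

```shell
# Sketch: print only the ID of the newest Flatcar Pro Stable AMI in a region.
# latest_flatcar_ami is a hypothetical helper name.
latest_flatcar_ami() {
  local region="$1"
  aws ec2 describe-images \
    --owners aws-marketplace \
    --region "$region" \
    --filters "Name=name,Values=Flatcar-pro-stable*" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --output text
}

# Usage:
# AMI_ID="$(latest_flatcar_ami us-east-1)"
```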

Next, we'll find the security group that needs to be assigned to our nodes, which was created along with the EKS cluster. We can look it up with a command like this one:

$ aws ec2 describe-security-groups \
    --region us-east-1 \
    --filters "Name=group-name,Values=eks-cluster-sg-*" \
    --query 'SecurityGroups[*].[GroupName,GroupId]'
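
If you have several clusters in the same region, the wildcard above will list a group for each of them. As an alternative sketch, you can ask EKS for the cluster's own security group directly. The helper name cluster_security_group is ours, and we're assuming the cluster security group is the one you want on your nodes, as in the rest of this post.

```shell
# Sketch: print the cluster security group ID for one specific cluster.
# cluster_security_group is a hypothetical helper name.
cluster_security_group() {
  local cluster_name="$1" region="$2"
  aws eks describe-cluster \
    --name "$cluster_name" \
    --region "$region" \
    --query 'cluster.resourcesVpcConfig.clusterSecurityGroupId' \
    --output text
}

# Usage:
# SG_ID="$(cluster_security_group flatcar-cluster us-east-1)"
```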

Finally, we'll need to encode our user-data script with the base64 command, like this:

base64 -w 0 <<EOF; echo
#!/bin/bash
mkdir -p /etc/docker
/etc/eks/bootstrap.sh flatcar-cluster
EOF
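
A stray blank line or a typo in the script changes the encoded value, so it's worth decoding the result once to make sure it matches what you meant to send. Here's a small round-trip sketch. It assumes a base64 that understands -d for decoding, and it strips line wrapping with tr so that it doesn't depend on the -w flag.

```shell
# Sketch: encode a user-data script and confirm it decodes back unchanged.
script="$(printf '#!/bin/bash\nmkdir -p /etc/docker\n/etc/eks/bootstrap.sh %s\n' flatcar-cluster)"

# Command substitution drops the trailing newline, so add it back before encoding.
# tr removes any line wrapping that base64 adds by default.
encoded="$(printf '%s\n' "$script" | base64 | tr -d '\n')"
decoded="$(printf '%s' "$encoded" | base64 -d)"

if [ "$decoded" = "$script" ]; then
  echo "$encoded"
else
  echo "round trip failed" >&2
fi
```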

We now have all the values we need for the launch template. We'll create a file called launch-template-flatcar.json, with these contents:

{
  "LaunchTemplateData": {
    "ImageId": "ami-0e06fb77ead4c2691",
    "InstanceType": "t3.medium",
    "UserData": "IyEvYmluL2Jhc2gKbWtkaXIgLXAgL2V0Yy9kb2NrZXIKL2V0Yy9la3MvYm9vdHN0cmFwLnNoIGZsYXRjYXItY2x1c3Rlcgo=",
    "SecurityGroupIds": [
      "sg-08cf06b2511b7c0a5"
    ]
  }
}
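
The AMI, security group and user data in this file will be different for your account and region. If you've collected them in shell variables, a heredoc can fill in the template for you. This is just a sketch; render_launch_template is a helper name of our own, and it assumes the values contain no characters that need JSON escaping (which holds for AMI IDs, security group IDs and base64 text).

```shell
# Sketch: render the launch template JSON from shell values.
# render_launch_template is a hypothetical helper. Arguments, in order:
# AMI ID, instance type, base64-encoded user data, security group ID.
render_launch_template() {
  local ami_id="$1" instance_type="$2" user_data="$3" security_group="$4"
  cat <<EOF
{
  "LaunchTemplateData": {
    "ImageId": "${ami_id}",
    "InstanceType": "${instance_type}",
    "UserData": "${user_data}",
    "SecurityGroupIds": ["${security_group}"]
  }
}
EOF
}

# Usage:
# render_launch_template "$AMI_ID" t3.medium "$USER_DATA_B64" "$SG_ID" \
#   > launch-template-flatcar.json
```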

And then create the template in EC2 with:

$ aws ec2 create-launch-template \
    --launch-template-name flatcar-eks-workers \
    --version-description "EKS workers for flatcar-cluster" \
    --cli-input-json file://./launch-template-flatcar.json

Once the launch template is created, we can start our nodes. To do that, we'll first need the subnets associated with our EKS cluster, which we can look up with a command like this:

$ aws ec2 describe-subnets \
    --region us-east-1 \
    --filters "Name=tag-key,Values=kubernetes.io/cluster/flatcar-cluster" \
    --query 'Subnets[*].SubnetId'

To start the nodes, we'll also need to pass the worker role that we created at the beginning, which grants the permissions listed there. The role must be given in ARN format (e.g. arn:aws:iam::012345678901:role/eks-worker-role). With that, we have all the information we need to start our nodes, like this:

$ aws eks create-nodegroup \
    --cluster-name flatcar-cluster \
    --nodegroup-name flatcar-workers \
    --region us-east-1 \
    --subnets subnet-4b4ded36 subnet-138de578 subnet-5a6ce217 \
    --node-role 'arn:aws:iam::012345678901:role/eks-worker-role' \
    --launch-template name=flatcar-eks-workers

This will create the instances and add them to our cluster. After a couple of minutes, we'll be able to interact with the cluster using kubectl.
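
Rather than guessing when the nodes are up, you can let the CLI block until the node group is active and then print its status. A minimal sketch, with wait_for_nodegroup being a helper name we made up:

```shell
# Sketch: wait until a managed node group is active, then print its status.
# wait_for_nodegroup is a hypothetical helper name.
wait_for_nodegroup() {
  local cluster_name="$1" nodegroup_name="$2" region="$3"
  aws eks wait nodegroup-active \
    --cluster-name "$cluster_name" \
    --nodegroup-name "$nodegroup_name" \
    --region "$region"
  aws eks describe-nodegroup \
    --cluster-name "$cluster_name" \
    --nodegroup-name "$nodegroup_name" \
    --region "$region" \
    --query 'nodegroup.status' \
    --output text
}

# Usage:
# wait_for_nodegroup flatcar-cluster flatcar-workers us-east-1
```

Once the group reports that it's active, the next step is talking to the cluster with kubectl.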

To do that, we'll need to generate a kubeconfig that connects to the cluster. There are a few different ways to do this. For example, we can call:

$ aws eks update-kubeconfig \
    --region us-east-1 \
    --name flatcar-cluster \
    --kubeconfig ./kubeconfig_flatcar-cluster

This will create a kubeconfig file that we can pass to kubectl to connect to the cluster, like this:

$ kubectl --kubeconfig kubeconfig_flatcar-cluster get pods -A
NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE
kube-system   aws-node-56mfs             1/1     Running   0          30s
kube-system   aws-node-7zng9             1/1     Running   0          30s
kube-system   coredns-59b69b4849-4s9gq   1/1     Running   0          29m
kube-system   coredns-59b69b4849-p7bml   1/1     Running   0          29m
kube-system   kube-proxy-7d8ld           1/1     Running   0          30s
kube-system   kube-proxy-lhz9h           1/1     Running   0          30s
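
To confirm that the new nodes are really running Flatcar, you can ask Kubernetes which OS image each node reports. Here's a sketch using a jsonpath query; node_os_images is a helper name of our own, and the exact image string you'll see depends on the Flatcar release.

```shell
# Sketch: list each node together with the OS image it reports.
# node_os_images is a hypothetical helper name.
node_os_images() {
  local kubeconfig="$1"
  kubectl --kubeconfig "$kubeconfig" get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.nodeInfo.osImage}{"\n"}{end}'
}

# Usage:
# node_os_images kubeconfig_flatcar-cluster
```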

Using Terraform

If you use Terraform to manage your AWS infrastructure, you can also create your Flatcar EKS workers with it. Our repository of Terraform examples includes fully working examples of how to do just that.

No matter how you're configuring your infrastructure, you'll need to find out the AMI for the latest version of Flatcar Pro. You can do this with a snippet like this one:

data "aws_ami" "flatcar_pro_latest" {
  most_recent = true
  owners = ["aws-marketplace"]

  filter {
    name = "architecture"
    values = ["x86_64"]
  }

  filter {
    name = "virtualization-type"
    values = ["hvm"]
  }

  filter {
    name = "name"
    values = ["Flatcar-pro-stable-*"]
  }
}

The official terraform-aws-modules/eks/aws module takes care of most of the work related to EKS clusters. With this module, using Flatcar for the EKS workers is easy: we simply need to select the AMI, pass the additional policy to the worker role, and set the additional pre_userdata. For example, we could do that with a snippet like this one:

module "eks" {
  source = "terraform-aws-modules/eks/aws"
  cluster_name = var.cluster_name
  cluster_version = "1.18"
  subnets = module.vpc.private_subnets
  vpc_id = module.vpc.vpc_id

  workers_additional_policies = [
    "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
  ]

  worker_groups = [
    {
      ami_id = data.aws_ami.flatcar_pro_latest.image_id
      pre_userdata = "mkdir -p /etc/docker"
      instance_type = "t3.medium"
      root_volume_type = "gp2"
      asg_desired_capacity = 2
    },
  ]
}

On top of the cluster, we'll need to configure at least the VPC, the Kubernetes provider, and the AWS region. There's a lot that we can customize, of course, but this is one possible configuration:

variable "cluster_name" {
  type = string
  default = "flatcar-cluster"
}

provider "aws" {
  region = "us-east-1"
}

data "aws_availability_zones" "available" {}

module "vpc" {
  source = "terraform-aws-modules/vpc/aws"

  name = "${var.cluster_name}-vpc"
  cidr = "10.0.0.0/16"
  azs = data.aws_availability_zones.available.names
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
  public_subnets = ["10.0.4.0/24", "10.0.5.0/24", "10.0.6.0/24"]
  enable_nat_gateway = true
  single_nat_gateway = true
  enable_dns_hostnames = true

  tags = {
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
  }

  public_subnet_tags = {
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
    "kubernetes.io/role/elb" = "1"
  }

  private_subnet_tags = {
    "kubernetes.io/cluster/${var.cluster_name}" = "shared"
    "kubernetes.io/role/internal-elb" = "1"
  }
}

data "aws_eks_cluster" "cluster" {
  name = module.eks.cluster_id
}

data "aws_eks_cluster_auth" "cluster" {
  name = module.eks.cluster_id
}

provider "kubernetes" {
  host = data.aws_eks_cluster.cluster.endpoint
  token = data.aws_eks_cluster_auth.cluster.token
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority.0.data)
}

Please note that to apply this configuration you'll need to have AWS credentials configured in your environment, for a user that has at least these permissions.

Running terraform init && terraform apply with the above configuration creates the cluster with the Flatcar nodes attached. It also creates a kubeconfig_<cluster-name> file that we can use to interact with Kubernetes.

$ kubectl --kubeconfig kubeconfig_flatcar-cluster get pods -A
NAMESPACE     NAME                      READY   STATUS    RESTARTS   AGE
kube-system   aws-node-6jqch            1/1     Running   0          92s
kube-system   aws-node-fzkrb            1/1     Running   0          92s
kube-system   coredns-c79dcb98c-8g5xs   1/1     Running   0          13m
kube-system   coredns-c79dcb98c-rdrmb   1/1     Running   0          13m
kube-system   kube-proxy-szqkp          1/1     Running   0          92s
kube-system   kube-proxy-xfzpm          1/1     Running   0          92s
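
Creating a cluster, a VPC and a set of workers in one go is a fairly big change, so you may prefer to review what Terraform is going to do before it does it. One way to sketch that is to save the plan to a file and apply exactly that plan. The function name and the tfplan file name are our own choices here.

```shell
# Sketch: initialize, save a plan, then apply exactly that plan.
# apply_with_saved_plan and the tfplan file name are illustrative choices.
apply_with_saved_plan() {
  terraform init &&
    terraform plan -out=tfplan &&
    terraform apply tfplan
}

# Usage (run from the directory containing the .tf files):
# apply_with_saved_plan
```

In practice you'd usually stop after the plan step to read it, and only then run the apply.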

Check out the rest of our examples if you want to see more ways of using Flatcar in your workers.

Using eksctl

eksctl is the official CLI for EKS. It greatly simplifies managing our EKS clusters from the command line. To use it, you'll need to have credentials for a user that has at least these permissions.

To create a cluster with Flatcar workers, we'll use a cluster configuration file. In the file, we'll need to select an AWS region and the corresponding Flatcar Pro AMI, which we need to look up as we did for the AWS CLI. For example, for the us-east-2 region:

$ aws ec2 describe-images \
    --owners aws-marketplace \
    --region us-east-2 \
    --filters "Name=name,Values=Flatcar-pro-stable*" \
    --query 'sort_by(Images, &CreationDate)[-1].[ImageId,Name,CreationDate]'

With this value, we can create our eksctl-flatcar.yaml file:

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: flatcar-cluster
  region: us-east-2

managedNodeGroups:
  - name: flatcar-workers
    ami: ami-02eb5584cab19ebbd
    instanceType: t3.medium
    minSize: 1
    maxSize: 3
    desiredCapacity: 2
    volumeSize: 20
    labels: {role: worker}
    tags:
      nodegroup-role: worker
    iam:
      attachPolicyARNs:
        - arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy
        - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
        - arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly
        - arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess
    overrideBootstrapCommand: |
      #!/bin/bash
      mkdir -p /etc/docker;
      /etc/eks/bootstrap.sh flatcar-cluster

With this file, we can call eksctl to create our cluster. It will take care of creating the cluster, the VPC, the launch templates, the security groups and so on.

eksctl create cluster -f eksctl-flatcar.yaml

This will take a while to complete. Once it's done, it will create a kubelet.cfg file that we can use to connect to the cluster:

$ kubectl --kubeconfig=kubelet.cfg get nodes
NAME                                           STATUS   ROLES    AGE    VERSION
ip-192-168-1-112.us-east-2.compute.internal    Ready    <none>   4m3s   v1.18.9-eks-d1db3c
ip-192-168-38-217.us-east-2.compute.internal   Ready    <none>   4m3s   v1.18.9-eks-d1db3c

$ kubectl --kubeconfig=kubelet.cfg get pods -A
NAMESPACE     NAME                       READY   STATUS    RESTARTS   AGE
kube-system   aws-node-rbztz             1/1     Running   0          27m
kube-system   aws-node-vc8qw             1/1     Running   0          27m
kube-system   coredns-66bc8b7b7b-gvn2g   1/1     Running   0          32m
kube-system   coredns-66bc8b7b7b-n889w   1/1     Running   0          32m
kube-system   kube-proxy-8w75g           1/1     Running   0          27m
kube-system   kube-proxy-9vrzp           1/1     Running   0          27m
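
If you ever need to regenerate the kubeconfig, or want it written to a specific path, eksctl can do that for an existing cluster. The sketch below reflects our understanding of the eksctl utils write-kubeconfig and eksctl get nodegroup subcommands, so please check them against the eksctl version you have installed. The helper names are our own.

```shell
# Sketch: write a kubeconfig for an existing cluster to a chosen path.
# write_cluster_kubeconfig is a hypothetical helper name.
write_cluster_kubeconfig() {
  local cluster_name="$1" region="$2" path="$3"
  eksctl utils write-kubeconfig \
    --cluster "$cluster_name" \
    --region "$region" \
    --kubeconfig "$path"
}

# Sketch: list the node groups eksctl knows about for a cluster.
# list_nodegroups is a hypothetical helper name.
list_nodegroups() {
  local cluster_name="$1" region="$2"
  eksctl get nodegroup --cluster "$cluster_name" --region "$region"
}

# Usage:
# write_cluster_kubeconfig flatcar-cluster us-east-2 ./kubelet.cfg
# list_nodegroups flatcar-cluster us-east-2
```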

We would like to have better integration with eksctl, which would allow us to simply specify the OS family instead of having to spell everything out in the configuration file. We are working with the community to enable that option as well (see this GitHub issue and vote on it if you'd like to see that added!).

You're ready to use your cluster!

We've covered a few ways in which we can add Flatcar workers to our EKS clusters. No matter how you decide to do this, your nodes are now ready to start running your Kubernetes workloads!

We'd love to hear how this experience goes for you.